A Roadmap for CIOs to Turn AI Budgets into ROI

Most AI pilots stall before delivering value. This guide gives CIOs a six-step roadmap for focusing budgets on high-ROI workflows and measurable outcomes.

In our previous blog post, we outlined a way to get unstuck from the 95% problem.

However, every CIO we speak with today faces the same tension.

Budgets for AI innovation are real. The pressure from Boards and CEOs is real. But the roadmap? That’s often unclear. To restate a key finding from the MIT report:

📊 95% of enterprise AI pilots fail to scale. Enterprises lead in pilot counts but rarely make it past proof-of-concept. Mid-market firms, by contrast, move from pilot to production in just 90 days.

Why the gap? Not lack of interest, not lack of spend. It’s the absence of an actionable roadmap that balances ambition with execution.

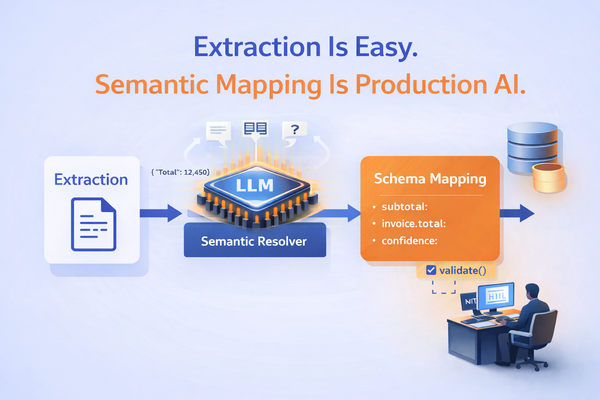

This post is a playbook for CIOs and innovation leaders who want to get on the right side of what MIT calls the GenAI Divide.

Step 1. Start with the Right Opportunity Lens

The default instinct for many CIOs is to allocate AI budgets to Sales & Marketing. It makes sense — KPIs are easy to measure: demos booked, leads generated, email open rates. More importantly, it's easier to sell to the CEO and the Board.

But here’s the catch:

💡 MIT found that 50% of AI budgets go to Sales & Marketing, while the highest ROI often comes from overlooked cost centers like finance, operations, and compliance.

These functions may not make headlines, but they are where structural efficiency and bottom-line savings are unlocked. MIT’s data shows that organizations crossing the GenAI Divide are achieving outsized returns not by chasing customer-facing pilots, but by transforming the processes nobody talks about in board meetings.

Back-office automation delivers tangible, measurable gains:

- Eliminating BPO contracts → customer support operations and document-processing centers can be replaced by agentic systems, saving millions annually.

- Reducing agency spend → AI-driven content generation, legal document review, and contract analysis can cut reliance on external providers by 20–30%.

- Accelerating risk and compliance checks → automated policy enforcement and transaction monitoring reduce errors, violations, and audit risk.

- Cutting cycle times in finance and procurement → invoice processing, AP/AR reconciliation, and vendor risk scoring can shift from weeks to hours.

These aren’t speculative. MIT documents cases where enterprises achieved $2–10M in annual savings by retiring BPO contracts, cutting creative agency costs, and automating finance operations. Many of these are static workflows run entirely by hand (mostly in Excel spreadsheets) that are begging to be reimagined with AI.

Map your AI portfolio beyond the front office.

Ask: which cost centers could AI convert from drags on margin into drivers of efficiency?

Step 2. Don’t Overlook Shadow AI — Harness It

Most CIOs are focused on official pilots — budgeted, approved, and carefully monitored. But the reality is that your employees are already running dozens of “unofficial pilots” every day. MIT calls this the shadow AI economy: workers using ChatGPT, Claude, or Copilot on personal accounts to automate repetitive parts of their jobs.

The scale is astonishing: while only 40% of companies had purchased official AI subscriptions, employees at over 90% of the companies surveyed reported regular use of personal AI tools for work tasks. From drafting contracts to generating customer emails to crunching data summaries, shadow AI is already transforming workflows.

Most enterprises respond with fear, restricting usage or banning tools altogether. But forward-thinking organizations (in our experience, often mid-size companies) treat it as a boon, not a threat. Shadow AI gives you real-time visibility into:

- Where value is already being created (look at which tasks employees are automating most often).

- Which employees are your power users (they can become champions in official rollouts).

- What expectations workers now have (flexibility, iteration, speed — not rigid enterprise tools).

Your employees are already running the first wave of AI pilots. Use that signal.

- Identify power users experimenting with personal AI tools

- Bring them into roadmap conversations

- Reward this behavior and formalize high-value use cases into secure, enterprise pilots

Step 3. Avoid the Trap of Building In-House

When new technology waves arrive, the enterprise instinct is often to build. It feels safer, more controlled, and more aligned with security and compliance. Frankly, it also seems easier, since the friction of evaluating a crowded field of vendors is high. But MIT’s research is blunt: internal builds fail twice as often as external partnerships.

Why? Because building AI systems isn’t like building traditional software. Success requires:

- Dedicated teams who can iterate rapidly — not distracted by BAU (business-as-usual) projects.

- Specialized expertise in LLMs, prompt engineering, evaluation frameworks, and fine-tuning.

- Tolerance for long feedback loops, where systems break before they scale.

Enterprises rarely have the bandwidth or patience. MIT found that many CIOs poured months into internal builds, only to end up with brittle, underperforming tools their employees abandoned in favor of ChatGPT.

Meanwhile, external partners brought battle-tested playbooks from dozens of deployments, cutting timelines from nine months to 90 days.

Build only where differentiation is mission-critical. For the rest, adopt a bias toward partnerships. Bring in experts who have already climbed the learning curve so you don’t spend your innovation budget relearning painful lessons.

Step 4. Procure Like a BPO Client, Not a SaaS Buyer

Traditional enterprise procurement habits don’t work in GenAI. Many CIOs still evaluate AI vendors like SaaS providers: by feature lists, license costs, and demo quality. MIT’s research shows this is the wrong playbook. The most successful buyers treat AI like business process outsourcing (BPO), not SaaS.

That means:

- Customization is table stakes. A one-size-fits-all tool won’t survive your workflows.

- Business outcomes are the metric. Don’t ask, “What model do you use?” Ask, “How will this reduce my compliance cost or cycle time in 90 days?”

- Partnership, not purchase. AI deployments are co-evolutions — they need vendors who stay engaged, learn, and adapt with you.

MIT found that buyers who took this BPO-style approach had double the success rate of those who procured AI like software licenses.

When talking to vendors or co-building partners, replace the question “What features do you have?” with “How will this reduce my spend / cycle time / compliance risk in 90 days?”

Step 5. Build for Execution Beyond Pilots

Why do 95% of AI pilots fail to scale? Because most are designed to impress in isolation, not integrate into workflows. Pilots succeed in the lab but collapse in the wild.

MIT found the most common failure points were:

- Brittle workflows — the tool can’t handle real-world exceptions.

- Lack of integration — outputs don’t connect to core systems (CRM, ERP, case management).

- Weak governance — compliance teams block rollouts because auditability wasn’t built in.

Pilots that scale are different. They are built with execution in mind from day one. They plan for:

- Data readiness (clean, varied, secure, accessible).

- Governance (audit trails, privacy, compliance baked in).

- Integration (seamless fit into existing systems).

- Measurement (KPIs tied to P&L impact, not vanity metrics).

Think of the pilot as the first brick. The execution plan is the bridge. Without the bridge, the brick sits alone, impressive but useless.

Refuse pilots that can’t answer the scale questions upfront. Every pilot should have a roadmap to production.

Step 6. Shift Portfolio Toward Learning-Capable Systems

MIT’s research pinpoints the root cause of the GenAI Divide: most enterprise tools don’t learn, adapt, or remember. Employees happily use ChatGPT for quick drafts but won’t trust static enterprise tools for mission-critical work.

The missing ingredient is learning capability. Tools need memory, adaptability, and feedback loops. This is where agentic AI comes in: systems designed to orchestrate multi-step workflows, retain context, and improve over time.

Early adopters are already proving this:

- Accounts payable/receivable agents cutting month-end close cycles from weeks to days.

- Procurement and vendor agents automatically flagging compliance and policy risks before contracts are signed.

- Loan underwriting agents parsing borrower documents and flagging deviations and discrepancies, cutting decision time from days to minutes.

These aren’t demos — they’re production systems that adapt as they’re used.

Audit your AI portfolio. If your tools can’t learn or adapt, they’re doomed to stall. Invest in agentic, learning-capable systems that grow with your workflows instead of forgetting them.

An Actionable Roadmap for CIOs

Here’s the distilled playbook:

- Reframe opportunities: Look beyond Sales & Marketing to back-office automation

- Harness Shadow AI: Study employee usage as a leading indicator

- Bias toward partnerships: Don’t waste time on fragile internal builds

- Procure like BPO: Demand customization, outcome-based contracts, and partnership

- Plan for scale: Bake in governance, integration, and metrics from day one

- Invest in learning-capable systems: Adopt tools that adapt and remember

Where Agami Fits In

So how do CIOs make this work in their own context? We have outlined the principles, but turning them into a customized roadmap is the real challenge.

At Agami, we partner with CIOs to:

- Map your AI portfolio against high-ROI opportunities

- Identify 2–3 quick-win use cases in back-office or operations

- Formalize shadow AI usage into enterprise-grade pilots

- Build a pilot-to-production roadmap you can take to your CEO or Board

We don’t just drop in tools. We co-design a roadmap that balances ambition with execution.

Accelerating the 6 steps: Book a Free AI Opportunity Assessment

If you’re sitting on an AI budget and don’t want to become another statistic in the 95% of failed pilots, now is the time to act. Block a slot for the most useful 30 minutes you will spend thinking through your roadmap.

Book your complimentary session

Frequently Asked Questions

1. Why do most enterprise AI pilots fail to scale?

According to MIT’s State of AI in Business 2025 report, 95% of AI pilots fail because they are designed as isolated experiments without integration, governance, or measurable ROI goals. Pilots often succeed in labs but collapse when scaled to enterprise workflows.

2. What is the GenAI Divide?

The GenAI Divide refers to the gap between organizations that experiment with AI but fail to achieve business impact, versus those that successfully integrate AI into workflows. Enterprises often stall, while mid-market companies move faster from pilot to production with clear ROI.

3. Where should CIOs focus AI budgets for the highest ROI?

While many CIOs direct AI budgets to Sales and Marketing, the highest ROI is often found in back-office functions such as finance, procurement, operations, and compliance. These cost centers deliver measurable efficiency gains by reducing BPO spend, cutting cycle times, and ensuring compliance.

4. What is Shadow AI, and how should CIOs handle it?

Shadow AI refers to employees using personal tools like ChatGPT or Claude to automate work without IT approval. Rather than suppressing it, CIOs should treat Shadow AI as a roadmap, learning from these grassroots use cases and formalizing them into secure, enterprise-grade pilots.

5. Should enterprises build AI solutions in-house or partner externally?

MIT research shows internal builds fail at twice the rate of external partnerships. In-house efforts often lack the specialized skills, time, and iteration cycles required. Partnering with experienced vendors accelerates deployment, ensures customization, and reduces risk.

6. How can organizations measure the success of AI initiatives?

Success should be measured by business outcomes, not just usage metrics. Key measures include reduced cycle times, lower BPO or agency costs, faster financial close, improved compliance, and overall impact on P&L performance.

7. What role does agentic AI play in crossing the GenAI Divide?

Agentic AI systems address the core enterprise gap by adding memory, adaptability, and orchestration to workflows. Examples include accounts payable agents, procurement risk agents, and compliance agents that learn and improve over time, enabling true production-grade adoption.