Context Engineering

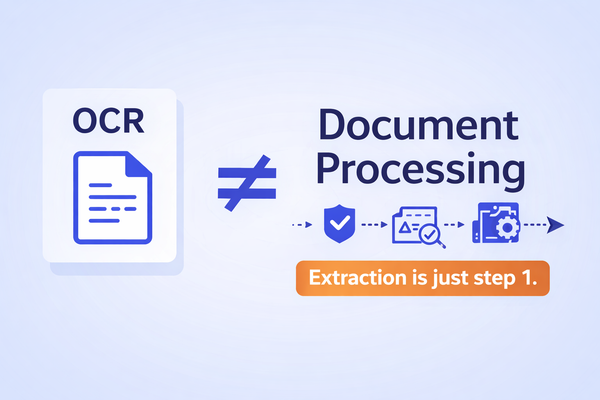

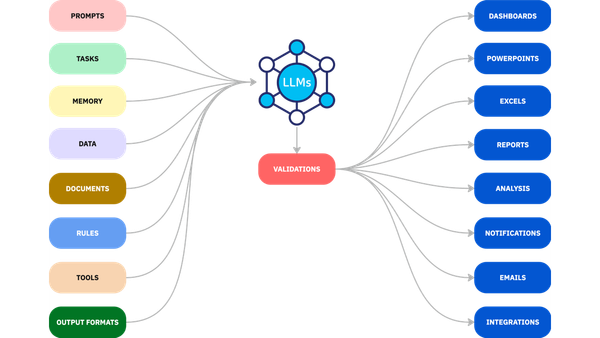

Document Processing: Validation is Plan A

Most document AI systems fail after extraction. This deep dive uses an example invoice pipeline to explain why validation breaks and how to fix it.

Learn more about how Agami uses context engineering to achieve high accuracy and reliability. Design retrieval pipelines that ground AI outputs in your enterprise knowledge and compliance rules, so those outputs are accurate and trustworthy.

Document Intelligence Agent

Document processing isn’t just LLMs and OCR. Learn how validation, normalization, and workflows make AI production-ready.

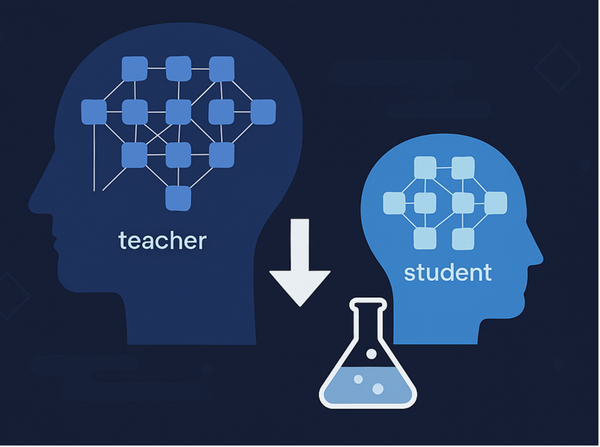

Private LLM

How Agami turned a large, costly AI model into a lean, production-ready asset for enterprise use.

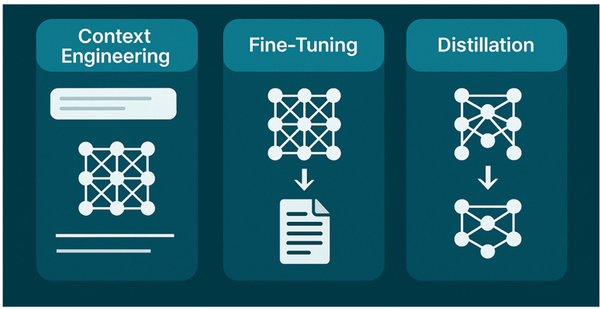

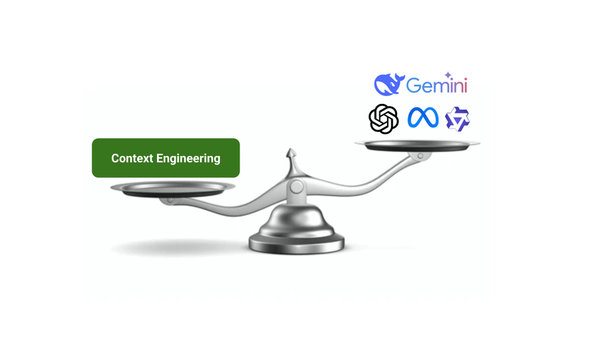

Context Engineering

Choosing between context engineering, fine-tuning, and distillation is key to making LLMs work in production. This guide explains each approach, when to use it, its trade-offs, and how to balance cost, accuracy, and scalability.

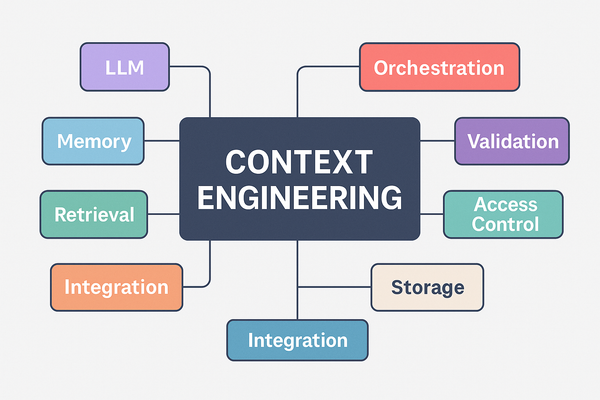

Context Engineering

What does it take to make Context Engineering work in production? In Part 3, we break down the platform components, team setup, key pitfalls, and the real trade-offs between building your own stack and buying a platform like Agami.

Context Engineering

Part 2 of our 3-part Context Engineering series. Discover why Context Engineering matters more than selecting the best LLM, and learn how structured context dramatically improves AI reliability.

Context Engineering

Part 1 of a 3-part series from Agami on context engineering—the real engine behind reliable AI. We break down what it is, why it matters more than model choice, and how it powers production-scale outcomes.