Unlocking the Future: The Critical Importance of Private AI for Enterprises

Privacy is not a nice-to-have for regulated industries. It is the difference between experimenting with AI and deploying it into production with confidence. Leaders who own security, compliance, and uptime know the path to value looks very different when sensitive data is on the line.

The enterprise AI fork: public SaaS AI versus private LLMs

Public AI tools deliver fast experimentation, but they operate as shared, multi-tenant services with limited control. For many enterprises, that creates blockers related to data movement, logging, and risk approvals. A private LLM stack is purpose-built for environments where confidentiality, audit, and deterministic controls are non-negotiable.

Think of this as two roads. One is a public endpoint with broad capability and shared guardrails. The other is a dedicated environment that lives in your network, honors your security policies, and integrates with your identity, observability, and data governance stack. If you are considering a private LLM path, explore the Private LLM service hub that focuses on deploying large language models privately in your VPC or on-prem with enterprise-grade security, compliance, and control.

What makes Private AI different

Private deployments are designed to meet enterprise expectations for confidentiality and control. They run inside your boundary, integrate with your IAM, and provide full observability and change management. This approach reduces exposure, aligns to risk frameworks, and creates a foundation for scale across business units.

Deployment architecture that aligns with your boundary

On-prem AI deployment places models and data pipelines in your data center, giving your teams physical and logical control. VPC AI keeps systems inside your cloud account with private networking, no public internet paths, and peering to existing services. In both cases, you can enforce controlled access and data governance, use your own KMS, and ensure data residency and retention meet policy.

Isolation choices matter. You can containerize per workload, run dedicated GPU pools per department, and restrict egress to approved endpoints. These decisions make it easier to validate that inference data is not retained for training, and that prompts, completions, and embeddings remain within your environment.
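As a rough illustration of restricting egress to approved endpoints, the sketch below checks outbound URLs against an allowlist at the application layer. The host names and the `is_egress_allowed` helper are hypothetical, and a check like this would complement network-level controls such as firewall rules rather than replace them.

```python
from urllib.parse import urlparse

# Hypothetical allowlist; replace with the endpoints your security team approves.
APPROVED_EGRESS_HOSTS = frozenset({"vectors.internal.example", "kms.internal.example"})


def is_egress_allowed(url: str, approved_hosts=APPROVED_EGRESS_HOSTS) -> bool:
    """Return True only for HTTPS URLs whose host is exactly on the allowlist."""
    parsed = urlparse(url)
    # urlparse lowercases the hostname and strips any port for us.
    host = parsed.hostname or ""
    return parsed.scheme == "https" and host in approved_hosts
```

Exact host matching is a deliberate choice here: suffix or wildcard matching is easier to misconfigure, so it is left out of the sketch.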

Security and compliance as first-class requirements

Regulated sectors must prove that AI workloads comply with established controls. For health data, that may mean HIPAA safeguards, encryption at rest and in transit, and documented BAAs. For financial data and credit policies, teams need rigorous access control, traceable approvals, and complete audit trails. For legal confidentiality, the standard is clear: no unintended disclosure, full privilege protection, and restricted sharing.

This is where enterprise AI compliance becomes operational. You need policy-aware routing, data redaction, content filtering, and logging that is both tamper-evident and reviewable. When the controls are embedded into the platform, you reduce reliance on manual process and accelerate risk sign-off for new use cases. For some organizations, this is the only way to deliver HIPAA-compliant AI for enterprises without compromising velocity.
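To make redaction and tamper-evident logging concrete, here is a minimal sketch that masks two kinds of identifiers and then appends the record to a hash-chained log. The regular expressions, field names, and helper functions are illustrative assumptions, not a complete DLP rule set or any particular product's log format.

```python
import hashlib
import json
import re

# Illustrative patterns only; real redaction needs a much broader rule set.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
GENESIS_HASH = "0" * 64


def redact(text: str) -> str:
    """Mask email addresses and US SSN-shaped numbers."""
    return SSN_RE.sub("[SSN]", EMAIL_RE.sub("[EMAIL]", text))


def _entry_hash(prev_hash: str, record: dict) -> str:
    # Canonical JSON so the same record always hashes the same way.
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: list, user: str, prompt: str) -> None:
    """Redact the prompt, then chain the entry to the previous entry's hash."""
    record = {"user": user, "prompt": redact(prompt)}
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({"record": record, "prev_hash": prev_hash,
                "hash": _entry_hash(prev_hash, record)})


def verify_log(log: list) -> bool:
    """Recompute the chain; any edited or reordered entry breaks it."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True
```

A chain like this makes edits detectable, not impossible. In practice the latest hash would also need to be anchored somewhere the log writer cannot modify.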

Cost realities leaders care about

Public tools make cost simple, but not always predictable at scale. Per-token pricing, network egress, and API rate limits can combine in ways that surprise finance teams as usage grows. Private deployments shift the model toward capacity planning and sustained utilization, which can lower unit costs for steady workloads.
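The trade-off between per-token pricing and fixed capacity can be sketched as a simple break-even calculation. The function names and every dollar figure below are hypothetical placeholders, and the model deliberately ignores staffing, power, and utilization swings.

```python
def api_monthly_cost(tokens_per_month: float, usd_per_million_tokens: float) -> float:
    """Usage-based cost: scales linearly with token volume."""
    return tokens_per_month / 1_000_000 * usd_per_million_tokens


def break_even_tokens(fixed_monthly_usd: float, usd_per_million_tokens: float) -> float:
    """Monthly token volume at which fixed capacity costs the same as per-token pricing."""
    return fixed_monthly_usd / usd_per_million_tokens * 1_000_000


# Hypothetical inputs: 20,000 USD/month of dedicated capacity vs 10 USD per million tokens.
threshold = break_even_tokens(fixed_monthly_usd=20_000, usd_per_million_tokens=10)
```

With these placeholder inputs the break-even point is 2 billion tokens per month. Below that volume the usage-based option is cheaper in this simplified model, and above it the fixed capacity is.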

Right-sizing capacity is the lever. You can select models that fit your tasks, deploy quantized variants, and use model distillation to boost throughput on commodity hardware. Retrieval reduces prompt sizes and recurring token usage, and caching answers lowers spend on repeated queries. For steady-state operations, GPU sharing and batching increase utilization and improve overall economics.
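Caching answers to repeated queries can be sketched as an exact-match cache keyed on the model name and a whitespace-normalized prompt. The `AnswerCache` class and the `generate` callable are hypothetical stand-ins for whatever inference client you use. A production cache would also need expiry and access checks.

```python
import hashlib
from typing import Callable, Dict


class AnswerCache:
    """Exact-match cache for model answers, keyed by model name and prompt."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        # Collapse runs of whitespace so trivially different prompts share a key.
        normalized = " ".join(prompt.split())
        return hashlib.sha256(f"{model}\n{normalized}".encode("utf-8")).hexdigest()

    def get_or_compute(self, model: str, prompt: str,
                       generate: Callable[[str], str]) -> str:
        """Return the cached answer, calling `generate` only on a miss."""
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        answer = generate(prompt)
        self._store[key] = answer
        return answer
```

Only identical prompts (after whitespace normalization) hit this cache. Matching paraphrased questions would require a semantic cache, which is a different technique.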

Latency and performance in production

Inference latency often comes from network distance, cold starts, and serialization overhead. In a private setup, co-locating models with data sources and applications trims round trips and stabilizes SLOs. Streaming responses reduce perceived wait time, and prompt caching avoids reprocessing repeated prompt prefixes.

There is also operational latency. With internal orchestration, you manage autoscaling thresholds, set token-per-second budgets, and route requests to the right model for the job. The outcome is a predictable response profile, fewer timeouts, and better performance on high-volume tasks like classification, extraction, and summarization.
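One common way to enforce a token-per-second budget is a token bucket. The sketch below is an assumption about how such a budget could be implemented, not a description of any specific orchestrator. The clock is passed in explicitly so the behavior is easy to test.

```python
class TokenBudget:
    """Token-bucket limiter: refills at `tokens_per_second`, capped at `burst`."""

    def __init__(self, tokens_per_second: float, burst: float, now: float = 0.0) -> None:
        self.rate = tokens_per_second
        self.capacity = burst
        self.available = burst
        self.last = now

    def try_consume(self, tokens: float, now: float) -> bool:
        """Admit the request if enough budget has accumulated by time `now`."""
        elapsed = max(0.0, now - self.last)
        self.available = min(self.capacity, self.available + elapsed * self.rate)
        self.last = max(self.last, now)
        if tokens <= self.available:
            self.available -= tokens
            return True
        return False


# Example: 100 tokens/second sustained, bursts of up to 200 tokens.
budget = TokenBudget(tokens_per_second=100, burst=200)
```

In a live service `now` would typically come from `time.monotonic()`, and a rejected request would be queued or routed to another pool rather than dropped.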

Where SaaS tools stall and how Private AI unlocks adoption

Many enterprises reach a ceiling with public tools due to vendor risk, data residency constraints, and DLP policies. Approvals for sensitive datasets are slow or not granted, and teams are forced into synthetic or scrubbed data that limits value. Rate limits and shared tenancy also make it hard to serve internal workloads with strict SLOs.

A private approach unblocks these issues. You can adopt AI at scale behind the firewall, tie model access to business roles, and enforce content policies uniformly. Sensitive datasets remain in place, inference logs are controlled, and approvals move faster because controls are built into the platform itself. When compliance and control decide the SaaS versus private AI question, this is the practical path.

The governance model that leaders expect

Governance turns AI from pilot to platform. It covers who can do what, with what data, and under which policy constraints. It also ensures you can prove it later to auditors and customers.

- Identity integration with SSO and role-based access control

- Encrypted storage with customer-managed keys and per-tenant isolation

- Data retention policies with configurable redaction and deletion windows

- Prompt and completion logging for audit with fine-grained controls

- Evaluation workflows for safety, accuracy, and bias before production

- Clear SLOs, error budgets, and rollback paths for model and prompt changes

When these capabilities are standard, product teams build faster and security teams maintain confidence. This balance is how enterprises scale beyond one-off experiments into durable, supported AI services.
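Two of the items in the list above, role-based access control and retention windows, can be sketched in a few lines. The role names, permission strings, and helper functions are hypothetical. In a real deployment the roles would come from your SSO or IAM provider rather than a hard-coded table.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical role-to-permission mapping for illustration only.
ROLE_PERMISSIONS = {
    "analyst": {"model:small:invoke"},
    "underwriter": {"model:small:invoke", "model:large:invoke"},
    "auditor": {"logs:read"},
}


def is_allowed(roles, permission: str) -> bool:
    """Grant access if any of the caller's roles carries the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


def is_expired(created_at: datetime, retention_days: int, now: datetime) -> bool:
    """True when a stored prompt or completion has outlived its retention window."""
    return now - created_at > timedelta(days=retention_days)


created = datetime(2024, 1, 1, tzinfo=timezone.utc)
checked = datetime(2024, 3, 1, tzinfo=timezone.utc)
should_delete = is_expired(created, retention_days=30, now=checked)
```

Unknown roles grant nothing in this sketch, so the default is deny.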

Operating model choices for resilient AI

Platform teams face decisions about model selection, orchestration, and integration patterns. A tiered model catalog supports varied use cases, from small fast models for routing and extraction to larger models for reasoning and generation. Policy-aware routing decides when to escalate to larger models or to apply retrieval steps for grounding.
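A routing decision of this kind might look like the sketch below. The task names, the confidence signal, and the 0.7 threshold are all assumptions made for illustration. The source does not specify how escalation is triggered.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical set of tasks that a small, fast model handles by default.
SMALL_MODEL_TASKS = frozenset({"routing", "extraction"})


@dataclass(frozen=True)
class Route:
    model_tier: str      # "small" or "large"
    use_retrieval: bool  # whether to run a retrieval step for grounding


def choose_route(task: str, needs_grounding: bool,
                 small_model_confidence: Optional[float] = None,
                 escalation_threshold: float = 0.7) -> Route:
    """Pick a model tier by task, escalating when the small model is unsure."""
    tier = "small" if task in SMALL_MODEL_TASKS else "large"
    if (tier == "small" and small_model_confidence is not None
            and small_model_confidence < escalation_threshold):
        tier = "large"
    return Route(model_tier=tier, use_retrieval=needs_grounding)
```

Keeping the decision in one pure function makes the routing policy easy to review and to cover with tests before it changes in production.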

Observability is the backbone. Real-time dashboards, alerts on drift and cost spikes, and correlation with application metrics keep systems healthy. A formal evaluation loop catches regressions as prompts evolve, knowledge bases change, or user behavior shifts. Together, these practices establish a reliable operating model for AI in production.
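An evaluation loop can start as a small regression gate: score a candidate prompt or model on a fixed set of cases and block the release if accuracy drops beyond a tolerance. The exact-match scoring, the sample cases, and the 0.02 tolerance below are illustrative assumptions.

```python
from typing import Callable, Sequence, Tuple


def accuracy(predict: Callable[[str], str],
             cases: Sequence[Tuple[str, str]]) -> float:
    """Fraction of cases where the prediction exactly matches the expected label."""
    if not cases:
        raise ValueError("evaluation set must not be empty")
    correct = sum(1 for prompt, expected in cases if predict(prompt) == expected)
    return correct / len(cases)


def has_regressed(baseline: float, candidate: float, tolerance: float = 0.02) -> bool:
    """True when the candidate scores worse than the baseline by more than `tolerance`."""
    return candidate < baseline - tolerance


# Hypothetical labeled cases for a document classification task.
CASES = [
    ("invoice 4411 due in 30 days", "invoice"),
    ("claim form for water damage", "claim"),
    ("KYC package for new account", "kyc"),
    ("quarterly newsletter", "other"),
]
```

Exact match suits classification and extraction. Generative tasks such as summarization usually need rubric-based or model-graded scoring instead.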

Data locality, retrieval, and the accuracy problem

Accuracy improves when models are grounded in enterprise context. Retrieval augmented generation connects internal knowledge, documents, and data services to the model so answers are specific, current, and explainable. Citation-backed outputs and traceable sources support review processes and reduce the chance of hallucinations slipping into critical workflows.
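The retrieval-and-citation pattern can be sketched without any external libraries. The keyword-overlap scoring below is a deliberately naive stand-in for a real retriever (embeddings or a search index), and the document ids and texts are made up for the example.

```python
from typing import Dict, List, Tuple


def overlap_score(query: str, text: str) -> int:
    """Count distinct words shared by the query and the document (case-insensitive)."""
    return len(set(query.lower().split()) & set(text.lower().split()))


def retrieve(query: str, documents: Dict[str, str], top_k: int = 2) -> List[Tuple[str, str]]:
    """Return up to `top_k` documents with a non-zero overlap, best match first."""
    ranked = sorted(documents.items(),
                    key=lambda item: overlap_score(query, item[1]),
                    reverse=True)
    return [(doc_id, text) for doc_id, text in ranked[:top_k]
            if overlap_score(query, text) > 0]


def build_prompt(query: str, sources: List[Tuple[str, str]]) -> str:
    """Assemble a grounded prompt that asks the model to cite sources by id."""
    lines = ["Answer using only the sources below and cite them by id."]
    lines += [f"[{doc_id}] {text}" for doc_id, text in sources]
    lines.append(f"Question: {query}")
    return "\n".join(lines)


# Hypothetical internal documents.
DOCS = {
    "policy-7": "Credit memos require two approvals",
    "hr-2": "Vacation requests go to HR",
}
```

Because each source carries its id into the prompt, a reviewer can trace a cited answer back to the document it came from.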

Data locality matters for both speed and policy. Keeping retrieval pipelines in the same region or data center reduces hops and meets residency requirements. This pattern is essential for workloads that touch health records, credit data, legal briefs, and other sensitive sources.

Team enablement without sacrificing control

Engineering leaders must empower product teams while maintaining standards. A shared platform with guardrails lets teams ship AI features without reinventing permissions, logging, and evaluations. Templates for common tasks like extraction, summarization, and report generation reduce time to value.

With this model, security and compliance become multipliers rather than blockers. Central teams set the rules, provide toolkits, and monitor outcomes, while product teams focus on user needs. The result is faster delivery and fewer exceptions for audits and reviews.

Signals that you are ready for a private LLM stack

Several indicators suggest it is time to formalize your approach. Your pilot use cases rely on sensitive data. You face growing review cycles for new AI features. Your team needs predictable latency and dedicated throughput. You must prove controls to regulators, customers, and internal auditors.

If these resonate, a private stack will streamline approvals, improve performance, and create a shared foundation for multiple units. It aligns your AI roadmap to the same rigor applied to core systems. Most importantly, it lets you move from isolated experiments to pervasive capabilities with measurable impact.

How Agami makes private deployments practical and enterprise-ready

Agami helps enterprises deploy AI in their own environment with the controls, performance, and reliability that regulated industries require. Below is how our agents and services address the challenges outlined above, and how they integrate with your stack to deliver outcomes quickly.

Agents

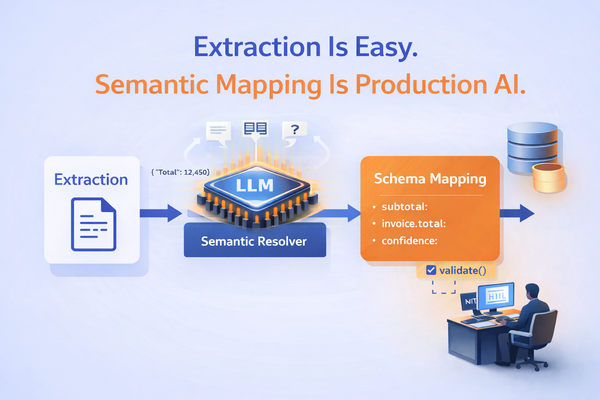

- Document Intelligence Agent: Extract and normalize data from PDFs, scans, spreadsheets, and images, then pass clean, structured outputs to downstream systems. This shrinks manual review time for forms, claims, invoices, and KYC packages, and keeps sensitive fields inside your network for policy enforcement.

- Voice Agent: Transcribe, summarize, and analyze calls securely to create structured intelligence. Run speech processing privately to meet call recording rules and reduce exposure of customer PII while improving service quality.

- Insights Agent: Turn structured and unstructured data into decision-ready insights, benchmarks, and risk alerts. Ground outputs in your warehouses and document stores to improve accuracy and auditing.

- Research Agent: Combine internal knowledge with trusted external sources to produce accurate, client-ready research. Use policy-aware retrieval to ensure only approved sources are included.

- Document Composer Agent: Assemble compliant memos and reports automatically from templates and structured data. This accelerates workflows like credit write-ups and clinical summaries while maintaining consistent language and formatting.

- Dashboard Builder Agent: Convert raw data into interactive dashboards for real-time KPIs, risk metrics, and compliance indicators. Integrate with your observability stack so AI metrics sit alongside operational metrics.

Services

- Private LLM: Deploy models in your VPC or on-prem with encryption, RBAC, and full control over data flow. This service focuses on enterprise security, compliance, and operational control, which makes it easier to win approvals and scale.

- Large Language Model Operations (LLMOps): Securely deploy, monitor, and govern models with dashboards, alerts, and compliance controls. Establish versioning, rollback, and change management to keep production stable.

- Context Engineering: Design retrieval pipelines that ground outputs in your knowledge and policies. This raises answer quality and provides traceability for reviewers.

- Model Fine Tuning and Distillation: Adapt models to your domain and optimize for speed and cost. Use smaller, efficient models for high-volume tasks without sacrificing accuracy.

- Retrieval Augmented Generation: Combine internal and approved external sources to deliver grounded, citation-backed outputs. Reduce hallucinations and support reviewer workflows with verifiable context.

- AI Evaluation & Testing: Measure accuracy, safety, and compliance before production. Automated tests and benchmarks give stakeholders confidence and reduce risk.

Putting it all together

Enterprises succeed when AI operates like any other mission-critical system. That means clear ownership, measurable SLOs, strong security, and predictable cost. The platform handles identity, policy, and guardrails so teams can build features that matter to customers and regulators.

With the right foundation, you can move quickly while staying aligned to policy. Sensitive data stays inside your boundary, latency improves, and governance becomes part of the workflow rather than an afterthought. This is the path to sustained adoption and business impact.

Explore more in our Private LLM hub, where we cover deploying large language models privately in your VPC or on-prem with enterprise-grade security, compliance, and control over sensitive data.

Ready to get started? Talk to Us

Frequently Asked Questions

1. What is the difference between public SaaS AI and private LLMs?

Public SaaS AI tools operate as shared services with limited control, making them less suitable for sensitive data environments. Private LLMs, however, provide dedicated environments within your network that honor security policies and offer enterprise-grade controls.

2. How do private AI deployments ensure data confidentiality?

Private AI deployments run inside your organization's boundaries, integrate with your identity and access management (IAM), and provide full observability. This design minimizes data exposure and aligns with compliance frameworks, making confidentiality a priority.

3. What are some benefits of on-prem AI deployment?

On-prem AI deployment gives organizations physical and logical control over models and data pipelines. This setup enables you to enforce controlled access, use your own key management system (KMS), and ensure data residency and retention meet policy standards.

4. How does a private LLM stack streamline compliance?

A private LLM stack integrates compliance controls directly into the platform, which simplifies approval processes, reduces reliance on manual work, and accelerates risk sign-off for new AI use cases, especially in regulated industries.

5. What are the cost advantages of private AI deployments?

Private AI deployments offer predictable costs through capacity planning and sustained utilization, compared with the variable costs associated with public tools. This allows for better financial management as AI usage grows and provides opportunities for optimized resource allocation.

6. What key indicators suggest an enterprise is ready for a private LLM stack?

Working with sensitive data, facing lengthy review cycles for AI features, requiring predictable latency, and needing to demonstrate compliance controls to regulators all indicate that a private LLM stack is needed.

7. How does Agami facilitate private AI deployments?

Agami provides a suite of agents and services designed for secure and compliant AI deployments, helping enterprises leverage private language models adapted for their specific environments while integrating seamlessly with existing systems.