Why Most Generative AI Pilots Fail (and How to Be in the 5% That Succeed)

The State of AI in Business 2025 Report found that 95% of generative AI pilots fail to deliver ROI. This post summarizes the report’s findings and shares Agami’s perspective on how to design pilots that scale into production.

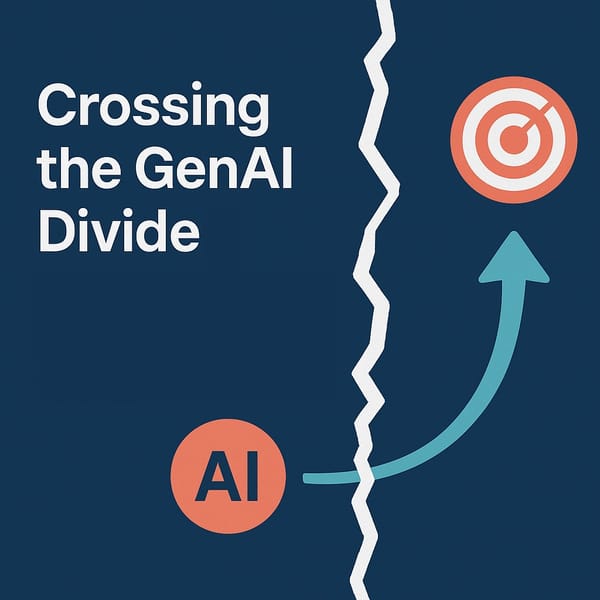

Introduction: The GenAI Divide

The State of AI in Business 2025 Report, published by MIT NANDA, reveals a sobering reality: despite $30–40 billion in generative AI investment, 95% of enterprise pilots fail to deliver measurable ROI.

This gap is what the report calls the GenAI Divide. Employees are embracing consumer tools like ChatGPT and Copilot, but only 5% of enterprise pilots scale into production.

In this post, we summarize the report’s findings and add Agami’s own lessons on how to avoid becoming part of the 95%.

What the Report Reveals: The GenAI Divide in Numbers

- High investment, low returns → Despite billions spent, most pilots stall before scaling.

- Adoption ≠ transformation → Individual productivity gains do not translate into enterprise-wide impact.

- Four patterns of the divide:

  - Limited disruption → Only a handful of sectors (Tech & Media) show real transformation.

  - Enterprise paradox → Large firms run the most pilots but succeed the least.

  - Investment bias → Companies over-index on flashy sales and marketing pilots while underinvesting in back-office workflows.

  - Implementation advantage → External partnerships succeed at twice the rate of internal-only builds.

We are still in the very early days of GenAI adoption, and many enterprises are only experimenting with the technology, so these results are not entirely surprising.

We often see enterprises pick “shiny” use cases for prototyping because they make great boardroom slides, not because they solve urgent problems. The best ROI often comes from unglamorous back-office workflows such as credit memos, claims, or compliance, where inefficiency is measurable.

Why Pilots Fail: The Learning Gap

The report highlights that the biggest barrier is not regulation or infrastructure, but learning.

- No memory or adaptation → Pilots do not retain context, improve with feedback, or integrate into workflows.

- Shadow AI vs. official AI → Employees succeed with consumer tools in their personal workflows, while sanctioned enterprise pilots stall.

- Top barriers identified:

  - User resistance and lack of adoption.

  - Poor model performance when applied to specialized domains.

  - Lack of workflow integration, with models remaining demos instead of embedded processes.

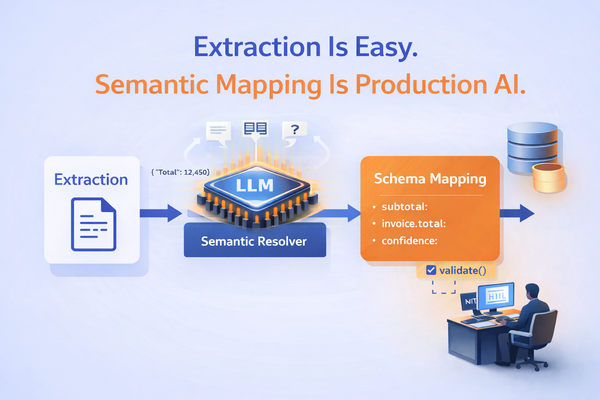

Building a demo or prototype is deceptively easy, but building a production-ready system is a different challenge. Production means uptime, monitoring, compliance, and integration with existing systems. Many pilots underestimate this gap and collapse when IT or compliance teams get involved.

Partnership matters. Teams that work with the right external partner are twice as likely to reach production and ROI.

Five Myths the Report Busts

- “AI will replace most jobs soon.” → Layoffs are limited, with most impact in outsourced roles.

- “Generative AI is transforming business.” → Adoption is high, but transformation is rare.

- “Enterprises are slow to act.” → Enterprises are running pilots aggressively, but they are not scaling.

- “Model quality is the main blocker.” → The bigger issue is workflow integration and learning, not raw model performance.

- “The best enterprises are building their own.” → Internal builds fail twice as often as externally partnered efforts.

We would add a sixth myth: “Top-down mandates accelerate adoption.” In practice, mandates often force teams to pick problems that are not suited to AI just to show progress. A culture of innovation, with bottom-up pilots anchored in real pain points, scales more successfully.

What Successful Pilots Do Differently

The report found that the small number of successful enterprises:

- Demand workflow-specific customization.

- Partner externally, doubling their success rate.

- Empower line managers and frontline champions instead of relying only on central labs.

- Focus on back-office automation where ROI is real, such as claims, credit memos (CAMs), and compliance.

Our experience reinforces this. The best pilots are grounded in existing workflows with clear metrics, and are co-owned by the business and IT. When the people who feel the problem daily drive the solution, adoption follows naturally.

How to Avoid Failure: Agami’s Playbook

From Agami’s work with enterprises, here is a repeatable playbook:

- Define success upfront and tie pilots to measurable KPIs (time saved, error rate reduced, ROI).

- Anchor in an existing pain point, such as CAM preparation, claims backlogs, or contract review.

- Pick problems that fit AI’s strengths: turning unstructured data into structured data, synthesizing information across sources, and repetitive analysis.

- Design for compliance and privacy from day one with private LLMs, VPC deployments, and governance controls.

- Build evaluation-driven development with accuracy, safety, and compliance checks at every stage.

- Integrate into workflows. Do not run isolated demos; test inside live business processes.

- Partner to accelerate. Treat AI vendors as partners, not just software providers, to shorten the path to production.
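To make “define success upfront” and “evaluation-driven development” more concrete, here is a minimal Python sketch, assuming a hypothetical CAM-preparation pilot. The `KPI` and `EvalCase` classes, the two checks, the 10-digit “account number” rule, and every number and threshold are illustrative assumptions, not Agami’s tooling and not figures from the report. The idea it shows: targets are written down before the pilot starts, and every model or prompt change has to clear an explicit gate.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class KPI:
    """A success metric agreed before the pilot starts (illustrative)."""
    name: str
    baseline: float               # value measured in the current, pre-AI workflow
    target: float                 # threshold the pilot must reach to count as a success
    lower_is_better: bool = True  # e.g. minutes per document, error rate

    def met(self, observed: float) -> bool:
        """True if the observed value reaches the agreed target."""
        if self.lower_is_better:
            return observed <= self.target
        return observed >= self.target

    def improvement(self, observed: float) -> float:
        """Relative improvement over the baseline; positive means better."""
        delta = self.baseline - observed if self.lower_is_better else observed - self.baseline
        return delta / self.baseline


@dataclass(frozen=True)
class EvalCase:
    """One model output paired with the reviewer-approved expected answer."""
    output: str
    expected: str


Check = Callable[[EvalCase], bool]


def exact_match(case: EvalCase) -> bool:
    """Accuracy check: case- and whitespace-insensitive match with the expected answer."""
    return case.output.strip().lower() == case.expected.strip().lower()


def no_account_numbers(case: EvalCase) -> bool:
    """Toy compliance check (hypothetical rule): reject outputs containing 10+ consecutive digits."""
    return re.search(r"\d{10,}", case.output) is None


def pass_rates(cases: List[EvalCase], checks: Dict[str, Check]) -> Dict[str, float]:
    """Fraction of cases passing each named check."""
    return {name: sum(check(c) for c in cases) / len(cases) for name, check in checks.items()}


def gate(rates: Dict[str, float], thresholds: Dict[str, float]) -> bool:
    """Release gate: every check must meet its agreed minimum pass rate."""
    return all(rates[name] >= minimum for name, minimum in thresholds.items())


# Usage with made-up numbers for a hypothetical CAM-preparation pilot.
kpi = KPI("minutes per CAM", baseline=240.0, target=120.0)
print(kpi.met(100.0), round(kpi.improvement(100.0), 3))  # True 0.583

CHECKS: Dict[str, Check] = {"accuracy": exact_match, "compliance": no_account_numbers}
THRESHOLDS = {"accuracy": 0.9, "compliance": 1.0}
cases = [
    EvalCase("approve", "approve"),
    EvalCase("Refer to committee", "refer to committee"),
    EvalCase("decline", "approve"),
    EvalCase("approve", "approve"),
]
rates = pass_rates(cases, CHECKS)
print(rates)                    # {'accuracy': 0.75, 'compliance': 1.0}
print(gate(rates, THRESHOLDS))  # False: accuracy is below the agreed 0.9
```

In a real pilot the cases would come from reviewer-approved examples drawn from the live workflow, and the checks would be far richer than string matching and a regex. The design choice that matters is that the pass/fail rule is fixed in code before results come in, so a pilot cannot quietly redefine success after the fact.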

Conclusion: Crossing the Divide

The State of AI in Business 2025 Report shows that 95% of pilots fail, but the failures are predictable and avoidable.

The enterprises that succeed:

- Pick the right problems.

- Define clear success criteria.

- Build for compliance and integration.

- Partner to accelerate.

The report’s most striking finding is that partnerships matter. Enterprises that work with the right external partners are twice as likely to succeed. We have seen this firsthand. Having an experienced partner to design, evaluate, and productionize AI makes the difference between being in the 95% and joining the winning 5%.

If you are planning an AI pilot, or are stuck in one, let’s talk. We will help you avoid the 95% and join the winning 5%.