What Are Private LLMs and Why Enterprises Need Them

Private LLMs let enterprises use generative AI securely, on-premises or in a private cloud. Fine-tuned on internal data, they can deliver accurate, compliance-aligned insights while keeping sensitive information away from third parties and reducing the risk of data leaks.

Introduction

Enterprise AI adoption is no longer a “someday” initiative — it’s happening now. And large language models (LLMs) are leading the charge.

They summarize reports in seconds, draft proposals, analyze documents, and even surface insights you didn’t know you had. But for organizations handling sensitive data, public models come with real risks.

🔹 Data privacy issues

🔹 Regulatory uncertainty

🔹 Opaque infrastructure and usage policies

For enterprises where trust, compliance, and data control matter, public models often hit a wall.

That’s where Private LLMs come in: a better fit for the privacy-first, governance-driven reality of enterprise AI deployment.

What Is a Private LLM?

A Private LLM is an enterprise AI model deployed in your own environment — either on-premises or in a private cloud (VPC). It provides full control over how your data is processed, stored, and protected.

Unlike public AI platforms such as ChatGPT, Gemini, or Claude, private LLMs don’t transmit your queries or documents to external servers. They run inside your secure infrastructure, tailored to your business context.

Key Characteristics of a Private LLM:

🔹 Built on open-source or open-weight LLMs (e.g., Llama, Mistral, Falcon)

🔹 Fine-tuned on internal datasets and enterprise knowledge

🔹 Integrated into existing workflows and systems

🔹 Controlled by your own privacy and security protocols

If your organization is serious about AI privacy, data residency, and customization, a Private LLM is the deployment model that gives you both power and peace of mind.

Why Enterprises Are Moving to Private LLMs

Enterprise LLM use cases are maturing, and so are the expectations around how they’re deployed. Let’s break down the four biggest drivers behind this shift:

Data Privacy and Sovereignty

Public LLM APIs process inputs off-premises, sometimes even in other countries. That’s fine for casual use, but not for sensitive customer data, IP, or compliance-bound content.

With a private LLM, no prompt data leaves your perimeter: all inference happens on infrastructure you control.

This means:

- You meet your internal privacy standards

- You avoid data leakage risks

- You keep your IP (and your customers’ data) where it belongs
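The “no prompt data leaves your perimeter” guarantee is ultimately enforced by network egress controls, but an application can add a second check of its own. The Python sketch below refuses any inference endpoint whose host does not resolve to an allowlisted internal range. The `ALLOWED_NETWORKS` ranges and the `is_inside_perimeter` helper are hypothetical and would need to match your actual network layout.

```python
import ipaddress
import socket
from urllib.parse import urlparse

# Hypothetical allowlist: the internal ranges where your model servers run.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
    ipaddress.ip_network("127.0.0.0/8"),  # loopback, for local development
    ipaddress.ip_network("::1/128"),
]


def is_inside_perimeter(endpoint_url: str) -> bool:
    """Return True only if every address the endpoint's host resolves to is allowlisted."""
    host = urlparse(endpoint_url).hostname
    if not host:
        return False
    try:
        infos = socket.getaddrinfo(host, None)
    except socket.gaierror:
        return False  # fail closed: an unresolvable host is treated as external
    addresses = {ipaddress.ip_address(info[4][0]) for info in infos}
    # Every resolved address must fall inside at least one allowlisted network.
    return bool(addresses) and all(
        any(addr in net for net in ALLOWED_NETWORKS) for addr in addresses
    )
```

A client wrapper would call this before every request and fail closed when it returns `False`, so a misconfigured URL pointing at a public API is caught before any prompt is sent.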

Regulatory Compliance and Risk Management

If your business operates under GDPR, HIPAA, SOC 2, or industry-specific data governance policies, you already know the risk of sending data to third-party AI platforms.

A private LLM:

- Lets you manage data residency

- Enables granular audit logging

- Avoids third-party subprocessor entanglements

- Supports AI governance policies for high-risk industries
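Granular audit logging can start as simply as one structured record per model call. This is a minimal sketch, assuming a JSON Lines file as the log sink; the `log_inference` function and its field names are illustrative, not a standard schema. It stores SHA-256 digests of the prompt and response rather than the raw text, so the audit trail itself doesn’t become another copy of sensitive data.

```python
import hashlib
import json
import time
from pathlib import Path


def log_inference(log_path: Path, user_id: str, model: str, prompt: str, response: str) -> dict:
    """Append one audit record per model call as a JSON line and return it."""
    record = {
        "ts": time.time(),  # Unix timestamp of the call
        "user_id": user_id,
        "model": model,
        # Digests instead of raw text keep sensitive content out of the log.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "response_sha256": hashlib.sha256(response.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
    }
    # Open in append mode so earlier records are never rewritten.
    with log_path.open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(record) + "\n")
    return record
```

In production these records would more likely go to an append-only store or SIEM than a local file, but the record shape stays the same: who called which model, when, and on what input (by digest).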

Domain-Specific Customization

Public models are trained largely on general-purpose web data (e.g., Wikipedia, Reddit, web forums). That’s useful, until it isn’t.

Private LLMs are different. You can fine-tune them on:

- Industry-specific terminology

- Internal research reports

- Policy manuals, contracts, case logs

- Client-specific data (if approved)

This results in:

- Better model accuracy

- Lower hallucination rates

- A system that understands your language, your context, and your workflows
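Before fine-tuning, internal material usually has to be converted into training examples. The sketch below assumes your source material has already been distilled into question/answer pairs and writes them in the chat-style `messages` layout that many fine-tuning tools accept (check what your tool expects). The `SourceRecord` type, its `approved` flag, and the system prompt are hypothetical; the flag mirrors the “if approved” condition on client data above.

```python
import json
from dataclasses import dataclass


@dataclass
class SourceRecord:
    question: str
    answer: str
    source: str     # hypothetical label, e.g. "policy_manual" or "case_log"
    approved: bool  # hypothetical flag: cleared for use in training


# Hypothetical system prompt applied to every example.
SYSTEM_PROMPT = "You are an internal assistant. Answer using company terminology."


def to_chat_examples(records: list[SourceRecord]) -> list[str]:
    """Convert approved records into JSON lines in a chat-style 'messages' layout."""
    lines = []
    for rec in records:
        if not rec.approved:
            continue  # skip anything not cleared for training
        example = {
            "messages": [
                {"role": "system", "content": SYSTEM_PROMPT},
                {"role": "user", "content": rec.question.strip()},
                {"role": "assistant", "content": rec.answer.strip()},
            ]
        }
        lines.append(json.dumps(example, ensure_ascii=False))
    return lines
```

Filtering at this stage, rather than after training, keeps unapproved data out of the model weights entirely.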

Performance, Reliability, and Control

LLM infrastructure should be predictable, not subject to third-party API rate limits, regional outages, or shifting pricing. With private deployment:

- You run the model in your cloud or data center

- You scale it based on usage patterns

- You optimize latency based on user geography

Enterprise teams value performance SLAs, not generic usage quotas.

Private LLMs vs Public LLMs: A Quick Comparison

| Feature | Public LLMs | Private LLMs |
|---|---|---|
| Data Control | External APIs; limited visibility | Full control over data, infra, access |
| Security & Governance | Third-party access risk | Integrated with your policies and IAM |
| Compliance Alignment | Vendor-dependent | Designed for regulatory compatibility |
| Customization | General-purpose | Fine-tuned on domain-specific data |
| Latency & Reliability | External network dependent | Localized inference, lower latency |
| Total Cost of Ownership | Usage-based; can spike | Predictable infrastructure cost |
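The “usage-based vs predictable” cost trade-off in the table reduces to a break-even calculation. This is a deliberately simplified sketch: it assumes API spend scales linearly with tokens and treats the private deployment as a flat monthly cost, ignoring staffing, GPU utilization limits, and scaling steps. All figures in the example are hypothetical placeholders, not real prices.

```python
def monthly_api_cost(tokens_per_month: float, price_per_million_tokens: float) -> float:
    """Usage-based cost: grows linearly with token volume."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def break_even_tokens(monthly_infra_cost: float, price_per_million_tokens: float) -> float:
    """Monthly token volume at which a flat-cost private deployment matches API spend."""
    return monthly_infra_cost / price_per_million_tokens * 1_000_000


if __name__ == "__main__":
    # Hypothetical inputs: $20,000/month for private infrastructure, $10 per million API tokens.
    tokens = break_even_tokens(20_000, 10)
    print(f"Break-even at {tokens:,.0f} tokens/month")
```

With those placeholder inputs, break-even lands at 2 billion tokens per month; above that volume, the flat-cost deployment is cheaper under this simplified model. Real quotes and utilization data would be needed before drawing conclusions.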

Use Cases for Private LLMs in the Enterprise

Here’s where private LLMs are already delivering real-world value:

🔹 Market Intelligence and Data Firms

- Analyze proprietary datasets + public sources

- Extract insights from news, earnings calls, research archives

- Keep client and source data protected from leaks

🔹 Consulting & Professional Services

- Draft deliverables and frameworks securely

- Generate insights from client data (under NDA)

- Power internal search across previous engagements

🔹 Healthcare & Life Sciences

- Summarize clinical trial data

- Draft care plan documentation

- Enable on-prem AI assistance that respects PHI privacy

Deploying Private LLMs: Build vs Partner

Standing up a private LLM involves more than a Hugging Face repo and a Kubernetes cluster.

You’ll need:

- GPU infrastructure (on-prem or in a VPC)

- Model selection and fine-tuning strategy

- Retrieval-Augmented Generation (RAG) pipelines

- Workflow integration and access control

- Monitoring, logging, and governance
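The RAG pipeline and access-control items above fit together naturally: filter what a user is allowed to see before ranking, then ground the prompt in what survives. In this minimal Python sketch, a bag-of-words cosine similarity stands in for the embedding model and vector database a real pipeline would use; the `Document` type, role names, and prompt template are hypothetical.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Document:
    doc_id: str
    text: str
    allowed_roles: set[str] = field(default_factory=set)  # hypothetical role labels


def _vectorize(text: str) -> Counter:
    """Term-frequency vector; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[Document], user_roles: set[str], k: int = 2) -> list[Document]:
    """Rank only the documents this user may see and return the top k with nonzero overlap."""
    visible = [d for d in docs if d.allowed_roles & user_roles]  # access control first
    q = _vectorize(query)
    scored = [(_cosine(q, _vectorize(d.text)), d) for d in visible]
    ranked = [d for score, d in sorted(scored, key=lambda pair: pair[0], reverse=True) if score > 0]
    return ranked[:k]


def build_prompt(query: str, context: list[Document]) -> str:
    """Ground the model's answer in retrieved passages, tagged with their IDs."""
    passages = "\n".join(f"[{d.doc_id}] {d.text}" for d in context)
    return f"Answer using only the context below.\n\nContext:\n{passages}\n\nQuestion: {query}"
```

Filtering before ranking (rather than after) means restricted documents never enter the candidate set, so they can’t leak into the prompt even if they would have scored highest.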

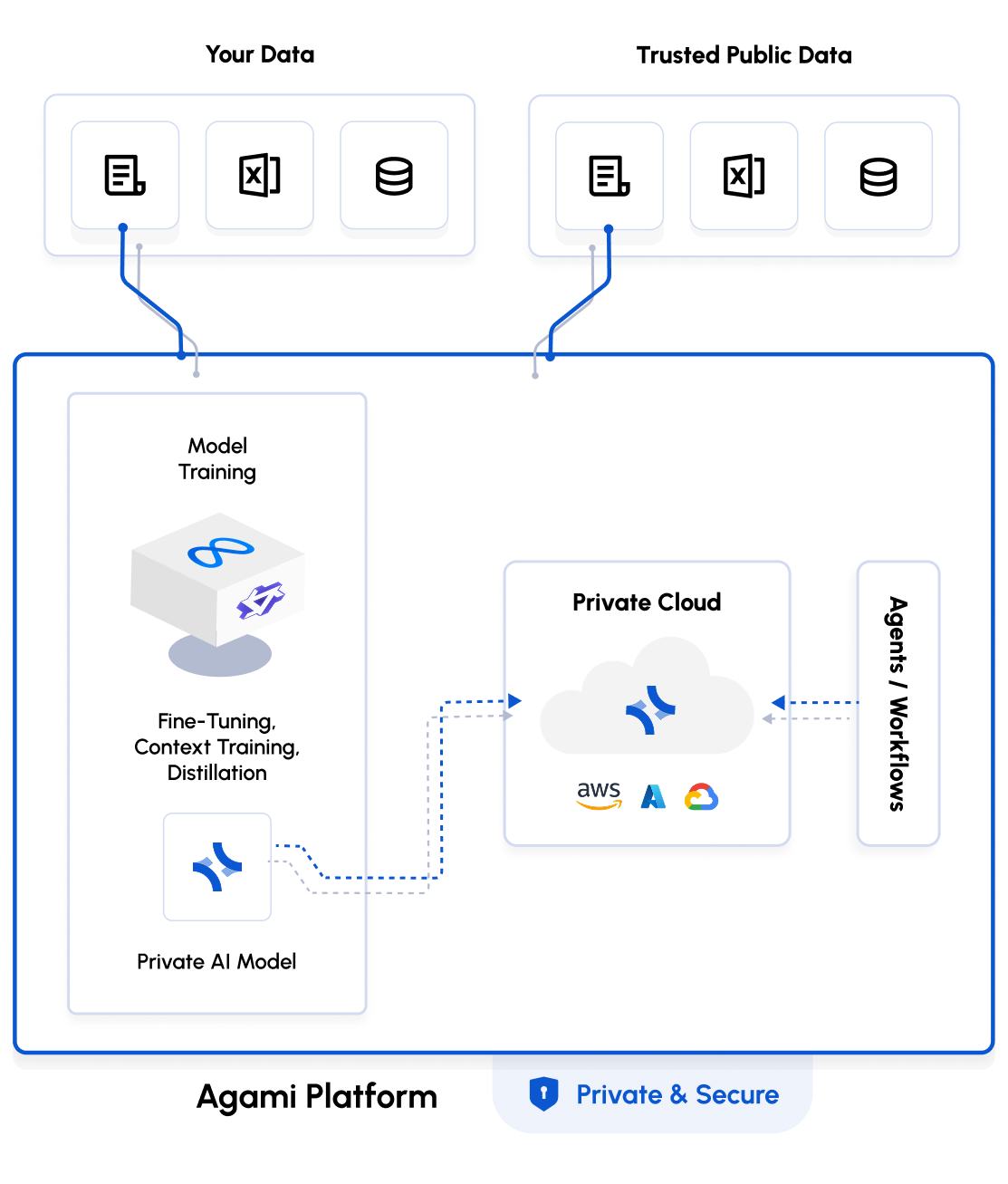

Alternatively, you can partner with a platform like Agami, which offers:

- Contextual private LLMs tuned to your domain

- Secure deployment in your cloud or on-prem environment

- Workflow integrations for real business use cases

- Access governance, agent orchestration, and observability baked in

It’s a faster, cleaner way to get to production-grade private AI without assembling all the parts from scratch.

The Bottom Line

Private LLMs are more than a security blanket. They’re the foundation for serious enterprise AI.

If you want AI that’s:

✅ Accurate on your data

✅ Compliant with your policies

✅ Controlled by your team

✅ Embedded in your workflows

… then public APIs won’t get you all the way there.

Private LLMs deliver on the three pillars we see driving enterprise AI success:

▶︎ Privacy-first architecture

▶︎ Contextual accuracy

▶︎ Workflow integration

🚀 Curious Where Your Organization Stands?

Try our free AI Opportunity Assessment — and get a clearer sense of where a private LLM fits in your roadmap.

Frequently Asked Questions (FAQ)

1. What is a Private LLM in enterprise AI?

A Private LLM is a generative AI model deployed within an enterprise’s own infrastructure, such as a VPC or on-premises servers, supporting privacy, control, and compliance.

2. Why are enterprises moving from public to private LLMs?

To avoid data exposure risks, meet regulations like GDPR and HIPAA, and gain control over model customization and governance.

3. Can a Private LLM be fine-tuned on company data?

Yes. Private LLMs can be fine-tuned on internal data such as reports and knowledge bases for more accurate, domain-specific performance.

4. What infrastructure is needed to deploy a Private LLM?

GPU-enabled servers (on-premises or in a VPC), orchestration tools, secure storage, and optionally a platform like Agami for deployment support.

5. Is a Private LLM better for compliance with data privacy laws?

Yes, with a caveat: a private LLM doesn’t guarantee compliance on its own, but it provides a secure architecture that makes it easier to align with GDPR, HIPAA, and SOC 2 requirements.
